CodeBork | Tales from the Codeface

The coding blog of Alastair Smith, a software developer based in Cambridge, UK. Interested in DevOps, Azure, Kubernetes, .NET Core, and VueJS.

-

How to start coding on Windows 10

Last year, as the pandemic started to bite, Paula put into practice an idea she’d had for a while: a community to support career-changing musicians into the software industry. A professional violinist herself, she completed Makers Academy in 2017, and started Musicoders to provide support to others like her. Over the last 11 months, it’s flourished into a diverse and friendly community of software-developing musicians, and has congratulated approximately five members as they’ve started their new careers in that time.

As a musical software developer myself, I’ve been lurking in the Slack team for much of its life. Something that has come up a few times of late is the question of setting up a development environment on Windows: many online bootcamps and starter programs assume or even require macOS or a Linux distribution such as Ubuntu, with nary a mention of Windows at all. I thought I’d try to fill that gap.

For the purposes of this guide, I’m assuming you’re running Windows 10, and that it’s version 1903 (May 2019 Update) or higher. Find out how to check your Windows version here, and be sure to run Windows Update before you start this tutorial: it’s important to keep Windows up-to-date to get the latest features and fixes to protect your machine from The Bad Guys™.

-

Snake-casing JSON requests and responses with ASP.NET Core and System.Text.Json

I’ve been working with ASP.NET Core and .NET Core for about 5 years now, and with the 3.0 release it really hit heights of maturity. I find it an enormously productive and performant framework, and exceptionally well-designed.

The 3.0 release introduced a new, high-performance library for working with JSON structures, superseding the community Newtonsoft.Json library for HTTP traffic. Even amongst the fanfare of its release, and an unusually-high first full release version, developers quickly started finding holes in the release, many of which were not resolved even in the .NET 5 release timeframe. Even today, there are ten pages of issues labelled for System.Text.Json on GitHub, and an epic amount of work scheduled on the library for .NET 6.

This isn’t to say the library is buggy, just that there are still holes in it compared with the mature Newtonsoft.Json package that has been serving ASP.NET developers well for the better part of two decades. One of the gaps that I’ve hit a couple of times in the last few months, along with many other developers judging by my hunting round the internet, is full support for snake casing in JSON property names, `like_so`; out of the box, System.Text.Json writes property names as declared (PascalCase, by C# convention) and ships only a camelCase naming policy. There has been a custom naming policy implementation kicking around in the comments on that issue since August 2019, and updated in April 2020, but it was sadly dropped for the .NET 5.0 release which shipped six months later, and so we’re left waiting until November 2021 for its release. This is a shame, as snake case formatting is so common in HTTP APIs across the web.

Here is that implementation, courtesy of Soheil Alizadeh and jonathann92:

```csharp
using System;
using System.Text.Json;

namespace System.Text.Json
{
    public class SnakeCaseNamingPolicy : JsonNamingPolicy
    {
        // Implementation taken from
        // https://github.com/xsoheilalizadeh/SnakeCaseConversion/blob/master/SnakeCaseConversionBenchmark/SnakeCaseConventioneerBenchmark.cs#L49
        // with the modification proposed here:
        // https://github.com/dotnet/runtime/issues/782#issuecomment-613805803
        public override string ConvertName(string name)
        {
            // Count the upper-case letters after the first character:
            // each one needs an extra '_' in the output.
            int upperCaseLength = 0;

            for (int i = 1; i < name.Length; i++)
            {
                if (name[i] >= 'A' && name[i] <= 'Z')
                {
                    upperCaseLength++;
                }
            }

            int bufferSize = name.Length + upperCaseLength;
            Span<char> buffer = new char[bufferSize];
            int bufferPosition = 0;
            int namePosition = 0;

            while (bufferPosition < buffer.Length)
            {
                if (namePosition > 0 && name[namePosition] >= 'A' && name[namePosition] <= 'Z')
                {
                    buffer[bufferPosition] = '_';
                    buffer[bufferPosition + 1] = char.ToLowerInvariant(name[namePosition]);
                    bufferPosition += 2;
                    namePosition++;
                    continue;
                }

                buffer[bufferPosition] = char.ToLowerInvariant(name[namePosition]);
                bufferPosition++;
                namePosition++;
            }

            return buffer.ToString();
        }
    }
}
```

You then enable this by modifying your `Startup.cs` as follows:

```csharp
public void ConfigureServices(IServiceCollection services)
{
    services
        .AddControllers() // or .AddControllersWithViews()
        .AddJsonOptions(options =>
        {
            options.JsonSerializerOptions.DictionaryKeyPolicy = new SnakeCaseNamingPolicy();
            options.JsonSerializerOptions.PropertyNamingPolicy = new SnakeCaseNamingPolicy();
        });
}
```

This gets you probably 90-95% of the way to supporting snake casing throughout your ASP.NET Core application, but the one place I’ve found it doesn’t support is in the property keys in a `ModelStateDictionary` returned in a `ValidationProblemDetails` object by ASP.NET Core’s model binder, even with the `DictionaryKeyPolicy` option set:

```json
{
  "title": "One or more validation errors occurred.",
  "status": 422,
  "errors": {
    "Email": ["The Email field is required."],
    "Mobile": ["The Mobile field is required."],
    "Address": ["The Address field is required."],
    "FirstName": ["The FirstName field is required."],
    "LastName": ["The LastName field is required."],
    "DateOfBirth": ["The DateOfBirth field is required."]
  }
}
```

Here, we would expect the names of the properties with invalid values to be `snake_cased` also. Unfortunately this is a bug in ASP.NET Core 5.0: as best I can tell, it should be applying the naming policy to the property name before writing it, but it isn’t. So here’s how we solved the problem.

Following the documentation on writing a custom JsonConverter for .NET Core 3.1, I found the example on supporting Dictionaries with non-string keys wholly enlightening. It turns out that the correct type to derive from isn’t `JsonConverter<T>` as I initially expected (and spiked), but `JsonConverterFactory`: this provides a `CanConvert()` method which can be overridden for our use case, as well as a `CreateConverter()` method to provide an instance of our custom converter. We separately derive from `JsonConverter<T>` (the docs suggest as a private nested class of our Factory implementation) to provide the conversion logic itself. Here’s the skeleton of our custom `ValidationProblemDetails` converter:

```csharp
public class ValidationProblemDetailsJsonConverter : JsonConverterFactory
{
    // We can happily convert ValidationProblemDetails objects
    public override bool CanConvert(Type typeToConvert)
    {
        return typeToConvert == typeof(ValidationProblemDetails);
    }

    // And they're pretty easy to create, too
    public override JsonConverter CreateConverter(Type typeToConvert, JsonSerializerOptions options)
    {
        return new ValidationProblemDetailsConverter();
    }

    private class ValidationProblemDetailsConverter : JsonConverter<ValidationProblemDetails>
    {
        // The conversion implementation will go in here
    }
}
```

In the case of reading a `ValidationProblemDetails` object, we can delegate to the built-in converter, as we don’t need or want to do anything special here. We do that by instantiating a new `JsonSerializerOptions` and retrieving the converter from there:

```csharp
public override ValidationProblemDetails Read(ref Utf8JsonReader reader, Type typeToConvert, JsonSerializerOptions options)
{
    // Use the built-in converter from the default
    // JsonSerializerOptions
    var converter = new JsonSerializerOptions()
        .GetConverter(typeof(ValidationProblemDetails)) as JsonConverter<ValidationProblemDetails>;

    return converter.Read(ref reader, typeToConvert, options);
}
```

By passing no constructor arguments or other initialisation to `JsonSerializerOptions`, we get the default configuration of the serializer, including the default `ValidationProblemDetails` converter. We can’t simply instantiate the built-in `ValidationProblemDetailsJsonConverter`, as it’s marked `internal`.

The implementation of `Write()` is rather more involved. The System.Text.Json API is deliberately forward-only, so we’re unable to re-use the default implementation and fix it up: we have to reimplement the logic from the default implementation. Luckily it’s relatively trivial: write a `{`, write the fields defined in the RFC, write the `errors` extension to the RFC with each error serialised properly this time, close the `errors` extension with a `}`, and write the closing `}`.

```csharp
public override void Write(Utf8JsonWriter writer, ValidationProblemDetails problemDetails, JsonSerializerOptions options)
{
    writer.WriteStartObject();

    writer.Write(Type, problemDetails.Type);
    writer.Write(Title, problemDetails.Title);
    writer.Write(Status, problemDetails.Status);
    writer.Write(Detail, problemDetails.Detail);
    writer.Write(Instance, problemDetails.Instance);

    writer.WriteStartObject(Errors);
    foreach ((string key, string[] value) in problemDetails.Errors)
    {
        // Apply the configured naming policy to each dictionary key
        writer.WritePropertyName(options.PropertyNamingPolicy?.ConvertName(key) ?? key);
        JsonSerializer.Serialize(writer, value, options);
    }
    writer.WriteEndObject();

    writer.WriteEndObject();
}
```

(Note: the `writer.Write()` calls are convenience extension methods I wrote to make the code a little more readable; they just check the value is not null before calling the writer’s `WriteString()` or `WriteNumber()` method.)

The key bit for our requirement is the `WritePropertyName()` call inside the `foreach` loop, where we call `ConvertName()` using the supplied `PropertyNamingPolicy` on the dictionary key.

The full implementation then looks like this:

```csharp
using System;
using System.Text.Json;
using System.Text.Json.Serialization;

namespace Microsoft.AspNetCore.Mvc
{
    /// <summary>
    /// TODO: replace by built in implementation in dotnet 6.0
    /// https://github.com/dotnet/runtime/issues/782#issuecomment-673029718
    /// Implementation based on https://docs.microsoft.com/en-us/dotnet/standard/serialization/system-text-json-converters-how-to?pivots=dotnet-core-3-1#support-dictionary-with-non-string-key
    /// </summary>
    public class ValidationProblemDetailsJsonConverter : JsonConverterFactory
    {
        public override bool CanConvert(Type typeToConvert)
        {
            return typeToConvert == typeof(ValidationProblemDetails);
        }

        public override JsonConverter CreateConverter(Type typeToConvert, JsonSerializerOptions options)
        {
            return new ValidationProblemDetailsConverter();
        }

        private class ValidationProblemDetailsConverter : JsonConverter<ValidationProblemDetails>
        {
            private static readonly JsonEncodedText Type = JsonEncodedText.Encode("type");
            private static readonly JsonEncodedText Title = JsonEncodedText.Encode("title");
            private static readonly JsonEncodedText Status = JsonEncodedText.Encode("status");
            private static readonly JsonEncodedText Detail = JsonEncodedText.Encode("detail");
            private static readonly JsonEncodedText Instance = JsonEncodedText.Encode("instance");
            private static readonly JsonEncodedText Errors = JsonEncodedText.Encode("errors");

            public override ValidationProblemDetails Read(ref Utf8JsonReader reader, Type typeToConvert, JsonSerializerOptions options)
            {
                // Use the built-in converter from the default
                // JsonSerializerOptions
                var converter = new JsonSerializerOptions()
                    .GetConverter(typeof(ValidationProblemDetails)) as JsonConverter<ValidationProblemDetails>;

                return converter!.Read(ref reader, typeToConvert, options);
            }

            public override void Write(Utf8JsonWriter writer, ValidationProblemDetails problemDetails, JsonSerializerOptions options)
            {
                writer.WriteStartObject();

                writer.Write(Type, problemDetails.Type);
                writer.Write(Title, problemDetails.Title);
                writer.Write(Status, problemDetails.Status);
                writer.Write(Detail, problemDetails.Detail);
                writer.Write(Instance, problemDetails.Instance);

                writer.WriteStartObject(Errors);
                foreach ((string key, string[] value) in problemDetails.Errors)
                {
                    writer.WritePropertyName(options.PropertyNamingPolicy?.ConvertName(key) ?? key);
                    JsonSerializer.Serialize(writer, value, options);
                }
                writer.WriteEndObject();

                writer.WriteEndObject();
            }
        }
    }

    internal static class JsonWriterExtensions
    {
        internal static void Write(this Utf8JsonWriter writer, JsonEncodedText propertyName, string? value)
        {
            if (value != null)
                writer.WriteString(propertyName, value);
        }

        internal static void Write(this Utf8JsonWriter writer, JsonEncodedText propertyName, int? number)
        {
            if (number != null)
                writer.WriteNumber(propertyName, number.Value);
        }
    }
}
```

-

Converting objects to arrays in TypeScript

Lately I’ve been writing TypeScript to provision Azure resources with Pulumi. Pulumi’s a fantastic tool, building on the proven technology of Terraform to provide Infrastructure as Code with real programming languages. Many of the samples are in TypeScript using Pulumi’s TypeScript SDK, so I opted to work with this one rather than, say, their .NET SDK.

One of the key benefits of using Pulumi to my mind is being able to efficiently unit test my infrastructure’s properties, and this is something treated as a first-class concern in Pulumi’s docs—always a good thing to find!

I also opted to use this as an opportunity to learn the new AVA test runner for JavaScript, for curiosity and learning as much as anything. More detail about that another time, perhaps. I had a set of standard properties for my Azure resources that I wanted to test in common, similar to parameterised tests or Theories in Xunit.net. What follows is the code I ended up with, sparing you, dear reader, the hours of frustration encountered along the way.
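The post's own code is not part of this excerpt. Purely as a rough sketch of the general idea, turning an object of expected properties into an array of cases lets one loop drive the same check for every property, much like a parameterised test. The names `expectedTags`, `actualTags`, `toCases`, and `failingCases` are hypothetical, and plain functions stand in for AVA so the snippet has no dependencies.

```typescript
// Hypothetical standard properties every resource should carry.
const expectedTags: Record<string, string> = {
  environment: "production",
  owner: "platform-team",
  costCentre: "1234",
};

// Hypothetical tags read back from a resource under test.
const actualTags: Record<string, string> = {
  environment: "production",
  owner: "platform-team",
  costCentre: "1234",
};

interface Case {
  name: string;
  expected: string;
}

// Convert the object into an array of { name, expected } cases.
function toCases(properties: Record<string, string>): Case[] {
  return Object.keys(properties).map((name) => ({
    name: name,
    expected: properties[name],
  }));
}

// Run the same check once per case, collecting the names that fail.
function failingCases(cases: Case[], actual: Record<string, string>): string[] {
  return cases
    .filter((c) => actual[c.name] !== c.expected)
    .map((c) => c.name);
}

const cases = toCases(expectedTags);
console.log(cases.length, failingCases(cases, actualTags));
```

In a real suite each case would become its own named test, so that a failure points at the specific property rather than at the loop.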

-

Options for running Docker Containers on Azure

Earlier this year I had some time off between jobs, and I used the opportunity to put some life into an idea I’ve been wanting to work on for nearly a decade now. I need to run the project on only a small budget, and therefore need to keep hosting costs down. On the other side of the coin, I really like the workflows I built around Docker, and want to continue building on those. As such, I’ve been researching options for running Docker Containers on Azure, beyond the obvious orchestration example of Kubernetes. This blog post is a not very scientific summary of what I’ve found so far.

-

Writing for Software Engineers

Well, tonight’s been fun. Sat out in the garden, putting together my toolchain for blogging. Ever since I first used Vue.js in 2018, I’ve been really impressed with the ecosystem, and the automated tooling provided by vue-cli, especially around things like integration with Prettier and Husky (well, Yorkie) to ensure everything is written just so. No arguments over code styles, no cognitive load from parsing inconsistently-formatted codebases, just go code. The slickness of that experience was something I wanted to replicate for my blog, so I didn’t have to worry about, e.g., line lengths, Markdown errors, etc. So, herein lies the tale of my sweet authoring setup.

-

A Complete Guide to Testing Your Software, Part 2

or, Listen to what your tests are mocking

You might remember from last time that we briefly covered the concept of Hexagonal Architecture, or “ports and adapters” as it’s sometimes otherwise known, in the context of using mocks and stubs to resolve some of the pain of integrated tests. I wanted to come at the problem from a slightly different angle for this blog post: listening to the tests we write, for what they’re telling us about our design. Let’s take a look at an example in C#:

```csharp
using Microsoft.Extensions.Logging;

public class Recorder
{
    private readonly ILogger _logger;

    public Recorder(ILogger logger) => _logger = logger;

    // Chooses between two mutually-exclusive outcomes
    public void Record(bool isA)
    {
        if (isA)
        {
            RecordA();
            return;
        }

        RecordB();
    }

    private void RecordA()
    {
        _logger.LogInformation("A happened");
    }

    private void RecordB()
    {
        _logger.LogInformation("B happened");
    }
}
```

This is contrived code for sure, but the overall shape is common. It might be

- a log statement in the success case or a different log statement in the error case;

- registering a discount for a purchase because it’s February and the customer was born in a leap year, or not;

- any other mutually-exclusive pair of states.

Paint your own domain over this structure, and then ask yourself the question “how do we unit test that logic?” If you answered “Mock the ILogger and expect LogInformation to be called”, then read on…

-

Patterns for SOLID code: Introduction

This is the first post in a new series I’m starting which discusses the Design Patterns I use, how, and why. The series can and possibly should be considered a companion series to the series on testing software.

I’ve long described Design Patterns as the “bricks and mortar” of software engineering, not so much in the sense that they make up the fabric of some construction, but in the sense that they’re fundamental. Some of the patterns, such as Iterator and Observer, have even become language features, only one step away from programming primitives.

It’s true to say that some of the patterns as originally described by the Gang of Four (GoF) appear complicated when viewed with the context of programming languages in 2020, but to disregard them because of that would most definitely be throwing the baby out with the bath water. A little adaptation makes them easier to implement and remember, and I’ll be sharing my revised implementations in this series.

But first, let’s look at some examples of the Design Patterns in the real world.

-

A Complete Guide to Testing Your Software, Part 1

Having recently started my new gig at Ieso Digital Health, I’ve had an opportunity to reflect on the things I learned building a greenfield service using recommended good testing practices at CloudHub360. As such, I thought I’d resume my … delayed … series on testing software.

Everything I describe in these posts is general purpose, applicable across languages, technology stacks, frameworks, and more. If you want to apply them in an existing codebase, you’re probably going to have some rearchitecting to do. If you’re working in a pure Functional language like F#, Haskell, etc., then these principles are going to take a bit of adaptation to that paradigm; if this is you, I would love to talk this through with you!

This post is a sort of TL;DR of the series as a whole. Yes, it’s quite long, but it’s the series distilled to its essence, drawing together information from a variety of sources into a single place. If you’re after a succinct answer to the question “how do I test my software”, this is about as short as it gets. (Sorry.)

-

Changes to the Cambridge Software Craftsmanship Community

At the end of August, I sent the following message to the members of the Cambridge Software Craftsmanship Community.

Hi everyone

It’s not often that we email our members, so please bear with me and read this to the end.

Recent events in the wider tech community, and reactions within the Software Craftsmanship community, have prompted wider discussions about the inclusiveness of the community, and I’ve decided to make some small but important changes to our local chapter.

My experience of the wider Software Craftsmanship community has been one of an inclusive, diverse, friendly and welcoming environment, to which I hope anyone who has attended SoCraTes events in the UK and abroad will attest. I believe we, as a community, have moved beyond the technical focus of the original Craftsmanship events like SCNA and SCUK to provide discussions and interactions that remain relevant today, and grow in value with each new voice added. I firmly believe we are a superset of the original movement, with more to offer to our members and the industry in general.

TL;DR From 1 September, we will be known as Cambridge Software Crafters, and new attendees will be required to agree to the Community Code of Conduct defined at https://communitycodeofconduct.com/. We are distancing ourselves from the recent remarks of Robert Martin.

Context

The infamous Google Memo[1] written by James Damore kicked off a chain of events within the wider Software Craftsmanship community that I believe requires action at the local level to address. Specifically, Robert “Uncle Bob” Martin wrote a reaction piece to Damore’s firing, entitled Thought Police[2]. This was a misguided piece which many took as defending Damore and his views, and the follow-up posts, On the Diminished Capacity to Discuss Things Rationally[3] and Women in Tech[4] did little to resolve the situation. The latter post in particular is a poor attempt at an apology that never actually says “sorry”. He has a history of misogynist statements and essays, so this is not a one-off.

Sarah Mei, a prominent US-based Software Developer whom I respect highly and whose opinion I value enormously, posted a series of tweets reacting to Martin’s blog post[5], including a critique of Software Craftsmanship and the community. Her critique is interesting and worth taking the time to read. Two things stood out for me as a community leader, though: 1) that Sarah considers the word “Craftsmanship” gendered and exclusionary; and 2) that the association with Robert Martin reinforced the view that the community is exclusionary.

I will address the second of these directly right now: Robert Martin does not speak for me, nor for the community I founded. Neither is he representative of the wider community which I have come to love for its inclusiveness and relentless attempts to improve the diversity of its membership. The views laid out in his original piece, and his poor apology, are neither opinions nor actions welcome in our community.

Community Name

Sarah’s thread of tweets set me and a bunch of other community leaders thinking and talking. There is a Slack team which you can join at http://slack.softwarecraftsmanship.org/; the discussions have been taking place in the #culture channel. After discussion with the co-organisers of the Cambridge community, we have agreed to rename the group to “Cambridge Software Crafters”, and to refer to the “craft” of software rather than to “Craftsmanship”. We will also be referring to our members as Practitioners or Software Crafters rather than Software Craftsmen. This change will take effect from 1 September.

You are, of course, free to continue referring to yourself as a Software Craftsman if you would prefer! Please adopt the new gender-neutral language when referring to others, however, to help our community be as friendly, inclusive, and welcoming as we can. A gentle reminder to your fellow community members to use gender-neutral language in our meetups will go a long way, too.

Code of Conduct

When I first started this community in ~2012, codes of conduct were barely discussed, if they had even been “invented” then. After a number of events, particularly in the US, experienced some high-profile cases of sexual harassment, sexualised imagery in slidedecks, and other inappropriate behaviour, it is now commonplace for events to implement a Code of Conduct.

I have, from the beginning, tried to build a community that is open and friendly and safe for members to attend. Early efforts focussed on filtering membership to ensure ethical recruiters who were interested in being an active part of the community were welcomed. It has, however, taken me a long time – far too long, in reality – to implement a Code of Conduct for our community, to protect our members at large. To anyone that has felt uncomfortable within our community, I am deeply sorry.

As of 1 September, the Community Code of Conduct will be in place. All new members will be required to agree explicitly to adhere to this before joining the community, and continued membership of the community will be considered a tacit agreement to abide by the code of conduct.

Whom to contact

At least one of the organisers is present at every event we run, and is often facilitating; they are myself, Amélie Cornélis, Alex Tercete, and Ally Parker. If you are made to feel uncomfortable because of the words or actions of another member, and are not able to speak up at the time, please speak to one of us at the first opportunity so we may address the issue swiftly. We are easily reachable via Meetup if you prefer online contact.

Conclusion

Thank you for taking the time to read this. I hope I have articulated clearly the reasons for making these changes. I’ll be briefly reprising this email at the next Round Table Discussion on 5 September, and am happy to discuss the changes in person or via email. You can just hit reply on this email to send me your thoughts.

All the best

Alastair

(Text licensed under the terms of CC-by-SA v4)

-

Fun with Typescript and CasperJS

I've spent the last couple of days writing smoke tests for our application with [Typescript](https://typescriptlang.org/) and [CasperJS](http://casperjs.org/), and hit quite a bit of pain along the way, so I thought I'd share what I'd learned.

-

The Anatomy of a Unit Test

This is the latest in a series of posts I’m writing to support a new online course I’m putting together for Decacoder called Testing your code like a pro. Follow me on Twitter for updates on the course.

I’ve been practising and honing my unit testing techniques for around ten years now, and one of the key realisations I hit upon a while ago is that tests are like a sort of mini science experiment. They have a stated hypothesis, and that hypothesis is either proved or disproved depending on whether the test passes or fails respectively. I’ve noticed that good tests follow a structure very similar to that of a simple science experiment, such as those practised in school. Let’s explore this in some more detail.

The Scientific Method

Wikipedia introduces the Scientific Method as “…a body of techniques for investigating phenomena, acquiring new knowledge, or correcting and integrating previous knowledge.” It can be thought of as a process for exploring a specific knowledge space, identifying the (current) boundaries and limits of that space, which landmarks signpost new areas, etc. We explore a knowledge space with experiments, the purpose of which is to “determine whether observations agree with or conflict with the predictions derived from a hypothesis”.

The Scientific Method suggests the following general structure for an experiment:

- Hypothesis: the idea or theory we wish to prove or disprove

- Method: a detailed description of how we intend to go about proving or disproving our hypothesis

- Observations: the raw results obtained by following the method

- Analysis: an inspection of the observations to determine their significance

- Evaluation: based on the analysis, a conclusion on whether we can prove/disprove our hypothesis

Let’s say for example we wish to see if bricks float in water. We might design our experiment as follows.

Hypothesis

Bricks float in water

Method

Equipment

- Standard ten-litre bucket, measuring 305mm in diameter and 315mm high

- Standard clay house brick measuring 215mm long by 65mm wide by 102.5mm high, weighing 2.206kg

Process

1. Fill the bucket to 90% capacity with 9 litres of water

2. Place the brick on the surface of the water

3. Let go of the brick

4. Repeat steps 2 and 3 above for a total of 100 trials

Observations

| Trial | Brick Floated |
|-------|---------------|
| 1     | No            |
| 2     | No            |
| 3     | No            |
| …     | …             |
| 98    | No            |
| 99    | No            |
| 100   | No            |

Analysis

100% of trials resulted in the brick sinking. The mean, median and mode number of times the brick floated was 0, with a variance of 0. In trial 37, it appeared that the brick was floating, but it turned out the brick had not been fully released into the water.

Evaluation

The results are conclusive: that brick did not float in the water. We can be more confident in the general case by:

- Trying other bricks

- Trying different water

Other factors which may be important include the temperature of the water, the liquid itself, and the material from which the brick is made.

There are three important consequences of the Scientific Method, namely that the experiments are reproducible, falsifiable, and provide a measure of confidence in the results.

Reproducible

Given the very detailed description of the necessary equipment and the process of collecting results included in the Method, other scientists are able to replicate our experiment independently. This is important for verification of the results: if others follow our process and replicate our results, then the confidence in those results is increased. Furthermore, attempts to reproduce the experiment can identify flaws in its design, improvements that can be made to increase confidence in the results, or areas for further investigation.

Falsifiable

Not only does the documentation of the experiment allow others to replicate the experiment, it also offers the opportunity to falsify the results, i.e. to refute them or prove them incorrect. Falsification is an important concept: the experiment must be constructed in such a way as to allow for the hypothesis to be proven false. Furthermore, because the experiment is reproducible we allow the opportunity for falsification.

Confidence

Overall, the results of the experiment are expressed with a degree of confidence. This is usually expressed as a number of standard deviations from the mean, or n-sigma. For example, particle physicists use 5-sigma as their degree of confidence, meaning that if our brick floated only because of chance and the experiment was repeated 3.5 million times, then we would expect to see the brick float about once in all of those trials.
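The 3.5 million figure matches the one-sided tail of a normal distribution beyond five standard deviations. As a hedged check of that arithmetic, the sketch below integrates the standard normal density numerically with Simpson's rule; the function names `normalPdf` and `upperTail` are my own, and the normal-distribution assumption is what the n-sigma convention implies rather than anything specific to bricks.

```typescript
// Standard normal probability density function.
function normalPdf(x: number): number {
  return Math.exp(-0.5 * x * x) / Math.sqrt(2 * Math.PI);
}

// One-sided tail probability P(Z > sigma), integrated numerically with
// Simpson's rule. The density beyond `sigma + 10` is negligible, so the
// integral is truncated there. `steps` must be even.
function upperTail(sigma: number, steps: number = 10000): number {
  const a = sigma;
  const b = sigma + 10;
  const h = (b - a) / steps;
  let sum = normalPdf(a) + normalPdf(b);
  for (let i = 1; i < steps; i++) {
    // Simpson weights alternate 4, 2, 4, ... for interior points.
    sum += normalPdf(a + i * h) * (i % 2 === 0 ? 2 : 4);
  }
  return (sum * h) / 3;
}

const p = upperTail(5);
const oneIn = Math.round(1 / p);
console.log(p, oneIn);
```

The probability comes out at roughly 2.87e-7, which is about one chance in 3.5 million.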

Structure of a unit test

The following pseudocode describes the most common testing structure:

```
a_descriptive_test_name_of_our_expected_behaviour()
    // Arrange
    var dependency = new Dependency();
    var system_under_test = new SystemUnderTest(dependency);

    // Act
    var result = system_under_test.action();

    // Assert
    var expected = "expected result";
    assertThat(result, equals(expected));
```

You may already be familiar with this structure, known as the Arrange-Act-Assert pattern.

Revisiting this pseudocode sample in light of the Scientific Method, we can see that this pattern closely matches the one described there. Our test name is our hypothesis: we are stating something about the system which the test will prove correct or false.

The test function itself is the method. In the Arrange phase, we describe the equipment we need, set it up appropriately, and prepare the system under test for the experiment. The Act phase is actually carrying out the experiment for one trial, and the Assert phase is recording the result of that trial. Build servers can give us an insight into our tests’ success and failure rates, providing a level of analysis, from which we can evaluate the data to draw our conclusions about the system under test (or perhaps the quality of those tests).
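To make the mapping concrete, here is a minimal TypeScript sketch of one such test. The `Account` class and the `assertEqual` helper are hypothetical stand-ins for a real system under test and a real test framework; only the shape of the test is the point.

```typescript
// Hypothetical system under test: a bank account with a balance.
class Account {
  private balance: number;

  constructor(openingBalance: number) {
    this.balance = openingBalance;
  }

  withdraw(amount: number): void {
    this.balance -= amount;
  }

  getBalance(): number {
    return this.balance;
  }
}

// Minimal stand-in for a test framework's equality assertion.
function assertEqual<T>(actual: T, expected: T): void {
  if (actual !== expected) {
    throw new Error("expected " + String(expected) + " but got " + String(actual));
  }
}

// Hypothesis: the test name states what we expect to be true.
function withdrawing_an_amount_should_decrease_the_balance_by_that_amount(): void {
  // Arrange: set up the equipment.
  const account = new Account(100);

  // Act: run one trial of the experiment.
  account.withdraw(30);

  // Assert: record the observation against the prediction.
  assertEqual(account.getBalance(), 70);
}

withdrawing_an_amount_should_decrease_the_balance_by_that_amount();
```

The test passes silently and throws if the hypothesis is disproved, which is the pass/fail signal a build server would aggregate.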

Beyond the parallels with the Scientific Method, this structure provides us with a number of additional benefits. Following this structure as a convention allows us to more quickly understand a suite of tests we haven’t previously (or even recently) encountered. Furthermore, the implication of isolating tests from one another is similar to the concept of avoiding contamination in science experiments.

Test Names

There are two hard things in Computer Science: cache invalidation, and naming things. – Phil Karlton

If our test names are our hypotheses, then suddenly it’s clearer why names like `test1`, `doesItWork`, `test_my_object`, `test_bank_transfer`, etc. are unhelpful: they simply tell us nothing about the system under test, the context in which it’s operating, or, well, anything. A good test name, on the other hand, is highly specific; for example `withdrawing_an_amount_from_an_account_should_decrease_the_accounts_balance_by_that_amount`. Note also that important word `should` in there: this sets the tone for the entire test name; without it, or some equivalent word such as “must” or “will”, we cannot adequately describe the sentence as a hypothesis.

Test Size

Tests are best kept small and focussed. Rigorously applying the Single Responsibility Principle to your tests (i.e., ensuring that each test function tests only one hypothesis) helps achieve this. Scientific experiments don’t try to (in)validate more than one hypothesis at a time, and neither should our unit tests. A maximum of 10–15 lines per test function is best; more than this and the amount of work the test does becomes difficult to reason about.

A helpful rule of thumb for measuring how many things are being tested is the number of assertions that the test contains. If it contains a single assertion, then you can be certain it is testing only one thing; however, multiple assertions can still be ok if they form a single semantic or logical assertion. For example, for a system transferring an amount of money from one bank account to another, you may wish to write two assertions to prove that both accounts are updated correctly.
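To illustrate the bank transfer case, here is a small sketch in Python. The `Account` class and `transfer` function are hypothetical, made up for this example; the point is that the two `assert` statements together express one logical assertion, namely that the money moved.

```python
class Account:
    """Hypothetical bank account holding a balance."""

    def __init__(self, balance):
        self.balance = balance


def transfer(source, destination, amount):
    """Hypothetical transfer: move `amount` from one account to another."""
    source.balance -= amount
    destination.balance += amount


def transferring_an_amount_should_move_it_from_source_to_destination():
    # Arrange
    source = Account(balance=100)
    destination = Account(balance=50)

    # Act
    transfer(source, destination, 25)

    # Assert: two physical assertions forming a single logical one.
    assert source.balance == 75
    assert destination.balance == 75
```

If the test also checked, say, that an audit record was written, that would be a second hypothesis and would belong in its own test.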

Independence

Finally, it is important that both tests and test runs are independent of each other: one test should not interfere with another, and running the same test multiple times over an unchanged piece of code should always produce the same result. Tests that influence each other’s results are equivalent to contamination in a science lab: they result in anomalous observations, or results that are just plain wrong.

Just as contamination is usually the result of the influence of some external system (e.g., being exposed to the air), so it goes with unit tests. The network, file system, and database are all obvious contaminating systems; dates/times and random number generators are less-obvious contaminating systems that are no less problematic. It is important to introduce sensible abstractions for your application to ensure that your unit tests execute in a cleanroom environment free from external influence and the information we glean from our tests is not affected by things we cannot control.
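One way to picture such an abstraction is a clock that the code under test receives rather than reading the system time itself. The sketch below is in Python, and every name in it (`SystemClock`, `FixedClock`, `greeting`) is hypothetical; it shows the general idea rather than any particular library’s API.

```python
from datetime import datetime, timezone


class SystemClock:
    """Production clock: reads the real system time, which tests cannot control."""

    def now(self):
        return datetime.now(timezone.utc)


class FixedClock:
    """Test clock: always reports the same instant, so results are repeatable."""

    def __init__(self, instant):
        self._instant = instant

    def now(self):
        return self._instant


def greeting(clock):
    """Hypothetical code under test whose result depends on the time of day."""
    return "Good morning" if clock.now().hour < 12 else "Good afternoon"


def greeting_before_noon_should_be_good_morning():
    # Arrange: a clock frozen at 09:00, free from external influence.
    clock = FixedClock(datetime(2013, 1, 1, 9, 0, tzinfo=timezone.utc))

    # Act and Assert
    assert greeting(clock) == "Good morning"
```

The application would pass a `SystemClock` in production; the test passes a `FixedClock`, so it gives the same result whenever and wherever it runs.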

Conclusion

We’ve looked at the Scientific Method, and an example science experiment based on this method, drawing out the method’s key repercussions: reproducibility, falsifiability and confidence. We’ve drawn parallels between the structure of a simple science experiment and the Arrange-Act-Assert pattern recommended for unit tests. We talked about the Scientific Method as an act of exploration in a knowledge space, and we can view writing unit tests, particularly in a test-first style, in a very similar way.

In the next post in this series, we’ll be looking into the different types of test doubles.

–

* The Given-When-Then convention favoured by implementations of Gherkin, such as Ruby’s Cucumber and .NET’s SpecFlow, maps directly to these three steps.

-

Desire Lines in software architecture: what can we learn from landscape architecture?

Before Christmas I was talking with Simon about an architectural approach we’d taken on a recent project. The aim of the project is to replace an existing WinForms user interface with a shiny new HTML and JavaScript version. Part of this involves making HTTP requests back to the “engine” of the product, a .NET application, and of course our chosen data format is JSON. To protect the HTML UI from changes made in the Engine, we decided to keep a separation between the models we transferred over HTTP (what we termed Data Models), and the models we used in the application (or, “Application Models”). The approach that we took had similarities with the concept of Desire Lines from landscape architecture, as described in Practices for Scaling Lean and Agile Development by Larman and Vodde.
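As a rough illustration of that separation, here is a sketch in Python (the real project used JavaScript and .NET). The model classes and field names are hypothetical, invented for this example; the idea is that a single mapping function is the only code that knows both shapes.

```python
import json
from dataclasses import dataclass


@dataclass
class CustomerDataModel:
    """Shape of the JSON transferred over HTTP (hypothetical field names)."""
    customer_name: str
    balance_pence: int


@dataclass
class CustomerApplicationModel:
    """Shape the application code works with (also hypothetical)."""
    name: str
    balance: float


def to_application_model(data):
    # The only place that knows about both shapes: if the wire format
    # changes, this mapping changes and the rest of the UI does not.
    return CustomerApplicationModel(
        name=data.customer_name,
        balance=data.balance_pence / 100,
    )


payload = json.loads('{"customer_name": "Ada", "balance_pence": 1250}')
model = to_application_model(CustomerDataModel(**payload))
```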

-

Updates to Bob, v0.2 released!

Recently [I introduced a new library](http://codebork.com/2014/03/23/introducing-bob.html) to aid testing in C#, [Bob](https://github.com/alastairs/bobthebuilder). I've since made a couple of updates to it, one small, and one a bit larger. The latest release tackles a few robustness issues and sees it move out of alpha phase and up to version 0.2! [Updated: 2014-04-12 16:00 GMT]

-

Introducing Bob

Test Data Builders are awesome and you should use them to tidy up your test code (read about them in GOOS Chapter 22). I'm introducing a new library called [Bob](http://github.com/alastairs/BobTheBuilder) which replaces the need to write your own hand-rolled Test Data Builders with a generic solution that preserves the fluent syntax suggested by GOOS.
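For readers who haven’t met the pattern, a hand-rolled Test Data Builder of the kind described might look something like the sketch below. It is written in Python for brevity, `Customer` and `CustomerBuilder` are hypothetical names, and it does not show Bob’s own API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Customer:
    """Hypothetical domain object that tests need instances of."""
    name: str
    email: str
    is_active: bool


class CustomerBuilder:
    """Hand-rolled Test Data Builder: sensible defaults, overridden fluently."""

    def __init__(self):
        self._name = "Any Name"
        self._email = "any@example.com"
        self._is_active = True

    def with_name(self, name):
        self._name = name
        return self  # returning self is what makes the calls chainable

    def with_email(self, email):
        self._email = email
        return self

    def inactive(self):
        self._is_active = False
        return self

    def build(self):
        return Customer(self._name, self._email, self._is_active)


# A test states only the details it cares about; the rest are defaults.
customer = CustomerBuilder().with_name("Ada").inactive().build()
```

Every new domain object needs another builder class like this one, which is the repetition a generic solution sets out to remove.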

-

Software Craftsmanship, and Professionalism in Software

Another thing to come out of my reflections on Software Craftsmanship at SoCraTes UK this weekend was a clearer view on what professionalism means to me for software craftsmen. I believe there are three pillars of professionalism that need to be considered:

- A mindset of taking a methodical, deliberate, and considered approach to your work;

- Leaning on your tools, but not being dependent upon them; and

- Taking personal responsibility for your decisions and actions

-

A Plurality of Ideas: Contrasting Perspectives of Software Craftsmanship

Attending SoCraTes UK over the weekend got me thinking about Software Craftsmanship in general, and about the different perspectives within the movement in particular: especially the differences, as I perceive them, between the North American and the European schools.

-

SoCraTes UK 2013

This last weekend I attended the [SoCraTes UK conference](http://socratesuk.org) at the Farncombe Estate in the Cotswolds, near Moreton-in-Marsh. It was a fantastic weekend of mixing with, talking to, and learning from other software craftsmen from around the country and across Europe.

-

Automating TeamCity (Part 2 of n): Automating XenServer

Recently at work I have been working on a project to build out a new TeamCity installation on a small farm of servers. Having drawn some inspiration from Paul Stack, I knew that leveraging a virtualised environment could buy us some big wins in automating many aspects of the new TeamCity environment. This post continues a series of posts that will describe in some detail what I set out to achieve, why, and how I did so.

Read previous posts in this series:

-

Automating TeamCity (Part 1 of n)

Recently at work I have been working on a project to build out a new TeamCity installation on a small farm of servers. Having drawn some inspiration from Paul Stack, I knew that leveraging a virtualised environment could buy us some big wins in automating many aspects of the new TeamCity environment. This post begins a series of posts that will describe in some detail what I set out to achieve, why, and how I did so.

Motivation

At work, we currently have a number of TeamCity installations, ranging from the very small to somewhat larger: our smallest installation consists of a TeamCity server and a build agent all installed on a single machine, whilst the largest consists of a TeamCity server on one machine and a mixture of physical and virtualised build agents, to a total of eight agents. The initial motivation for the project was to consolidate these environments into a single larger environment to reduce administration overheads and remove silos of builds and their artefacts.

I had previously done some work to create a Virtual Machine (VM) template for the build agents in our main TeamCity installation, with some success: setting up a new build agent from scratch used to be a day’s work, and with the VM template that time initially came down to 10 minutes or so. Unfortunately, the template was a little fragile in the way it had been set up: for example, a PowerShell script that ran on boot would not always run, and the use of an answer file for Windows setup turned out to be a poor choice. Furthermore, over time the template only became more out of date as we made changes to our product and infrastructure, particularly in moving from Subversion to Git. It was still much faster to create new build agents than it used to be, however, not least because we didn’t have to wait for an old developer machine to become available, and when we bought another five build agent licences a couple of months ago, I was able to get the new VMs set up from this template in a few days.

We also wanted to set up a new domain on an isolated subnet for the new TeamCity infrastructure. We have approximately 25,000 tests in our main product, and sadly many of these are poor tests: in particular, there is a large number of tests that hit the underlying database for our product, and some hit the company’s Active Directory. Coupled with the network problems we have historically suffered (thankfully now mostly resolved!), it is sadly not uncommon to see spurious test failures because some component of these system tests could not be contacted. By placing TeamCity on its own domain, isolated from the high traffic of the main network, it is hoped that these tests will become more reliable.

After talking with Paul months ago, and in feeling some of this pain, I realised that putting everything into the template was not a sensible decision, and something more configurable was required. Paul had described how he had scripted the configuration of his build agents, to the extent that he could click “Run…” on a particular build configuration in TeamCity, and have a new build agent ready a few minutes later. This sounded like a really cool thing to be able to do, and it fitted well with a requirement from my colleague Adrian: that we be able to regenerate the build agents on a weekly or monthly basis to keep them clean and free of cruft. Enter the power of automation.

The Plan

A plan was forming in my mind. Onto each of the five new servers we had bought for this purpose, I would install Citrix XenServer, an Enterprise-class Server Virtualisation product I was familiar with from my time working at Citrix. The servers would be joined together into a pool so that VMs could freely move around them as necessary. We would need some form of shared storage for the XenServer pool to store its VM templates.

The VM templates themselves would be very slim: the minimum required software would be installed. For a basic build agent, this meant Windows Server 2012 plus the TeamCity Build Agent software. Further templates would be required for .NET 4.0 build agents and .NET 4.5 build agents, so that they could include the appropriate Windows and .NET SDKs. I would be very strict about what could be installed onto a build agent template and what could not, and pretty much everything could not.

Using the might of PowerShell, we would script the installation of dependent software onto a new build agent VM; Chocolatey (built on the popular NuGet package manager) would be of use here. Ideally, we would script the configuration of the build agent machine as well: set the computer name, join it to the domain, and more.

The final piece of the puzzle was TeamCity itself. We would need to set up a set of build configurations to automatically delete and re-create all the build agents currently running, and to allow for the creation of a single new build agent. We would also use TeamCity as the NuGet package source for our custom Chocolatey packages.

List of Tools Used

- Citrix XenServer 6.1 (free edition)

- FreeNAS (shared templates over iSCSI)

- Windows Server 2012

- PowerShell 3.0

- PowerShell SDK for Citrix XenServer 6.1

- Chocolatey

- and, of course, TeamCity

Part 1 Wrap-up

Hopefully this post has made clear the motivation for this project, and some of the requirements involved. When we set out, I was confident that, although the end result sounded more than a little utopian, the goals could be achieved. I’m glad to say that with a lot of research and tinkering, and hard work to get the bits working in production, it is possible to achieve that utopian state, and it’s pretty cool to see it happening. The detail of the solution will be covered in future posts.

-

Cambridge Software Craftsmanship Community: the next phase!

Three months ago, I founded the Cambridge Software Craftsmanship Community, and I am pleased to say that it has grown well over that period: we now have nearly 60 practitioners in our community! On Tuesday, we will be holding our third monthly Round-Table Discussion at Granta Design, but this is not what I want to talk about now. I want to talk about the next phase of the community’s development.

As I laid out in my introductory post, phase two of my plan is to introduce the hands-on sessions that are so popular at the London Software Craftsmanship Community, and this phase begins this September. On 18 September, we will hold our first hands-on session at Granta Design. This will take the form of an introduction to TDD, with the intention of providing a mentoring component as part of the exercise. Craftsmanship exercises are paired, and implemented using the practice of TDD, so it is my hope that this session will provide an introduction to the format for future sessions, as well as in some cases introducing people to a new skill. We will pair experienced TDD-ers with less-experienced TDD-ers and work through a relatively simple kata, such as the Roman Numerals kata.

I am also very pleased to announce that our second hands-on session will be delivered by Sandro Mancuso, founder of the London Software Craftsmanship Community, at the end of October. The details of the session are still to be finalised and announced, so keep an eye on Twitter (under the #camswcraft hashtag) and the CSCC’s Meetup site for updates.

It’s a really exciting time for the Cambridge Software Craftsmanship Community. I feel really privileged to be a part of it, and continue to be humbled by the feedback that I get. If you’re looking for a group to discuss and improve software development with other developers who want the same, check us out. We think you’ll like it.

-

My American Tour, Part 1: San Francisco

Introduction

As some of you know, my brother Toby got married in San Francisco at the beginning of May. Congratulations to him and Sera! As I’ve not had many opportunities to visit the US in the past (I’ve only been once before), I thought I would take this opportunity to make a proper trip of it, and so started mapping out what turned out to be not one but two holidays of a lifetime.

In something of a departure from my usual topic, I hope this short series of blog posts will serve as a reminder to me of the good times I had in the US, and jog my memory of all the awesome places I visited. I hope you enjoy reading these posts too!

My tour went as follows:

- 1-5 May: San Francisco

- 6-8 May: Yosemite National Park

- 8-10 May: Highway 1

- 11-13 May: Boston

- 14-17 May: New York

- 17-19 May: Washington, DC

1 May: Departure and Arrival

I believe the best holidays begin with an early start. Anywhere that requires you to get up before you would normally get up for work must be worth going to, and anywhere that requires you to get up before the sun has been bothered to get up doubly so. As it turned out, I was up at 4.00 to catch a train down to London to catch my flight. In a last-minute change of plan, I had decided to drive myself to work and leave my car there; this turned into a last-minute panic, when I realised that I’d only left 30 minutes to drive to work and walk to the station. And so, on that cold and rainy morning I found myself hurrying over the Cambridge Railway footbridge with three weeks of luggage in tow, hoping against hope that I wouldn’t miss my train. Luckily, all was well: I made the train with a few minutes to spare, and the Piccadilly line connection from Kings Cross to Heathrow Terminal 5 could barely have been smoother.

I met up with Toby and Sera, my family (Mum, Dad and Aunt), Sera’s Mum and a couple of Sera’s friends after checking my bags, and we headed through security. After a stop at Wagamama for some breakfast (they do really tasty omelettes!) we eventually managed to board the plane, a comfortable BA Boeing 747, which would be our home for the next 11 hours or so.

I’d heard a few bad things about BA, so I’ll admit my expectations were quite low. However, they were very good at looking after us throughout the flight, and provided an excellent selection of in-flight films. I managed to catch up on some films I’d recently missed at the cinema, including J Edgar, The Artist, and Hugo.

When we landed in San Francisco, it was 2.30pm local time and 10.30pm body-time. We got through a long queue for passport control, found our bags and flagged down a cab. Unfortunately, Toby and Sera’s pre-booked cab didn’t show up, but they thankfully managed to sort it fairly easily. We went our separate ways: Toby and Sera and their friends had booked an apartment near Alamo Square, whilst I’d booked into the excellent Hotel Rex just off Union Square. This quaint little hotel is done out in Art Deco styling, with a small bar that doubles up as the breakfast room. After doing a little exploration of the room and a bit of unpacking, I went in search of an early dinner with Mum and Dad. The concierge recommended Fino, a lovely authentic Italian restaurant on Post St, just a couple of blocks from the hotel. After a couple of very tasty courses, we realised we were completely shattered from the jet lag, and stumbled back to the hotel for a very early night. The adventures would start for real tomorrow!

2 May: City Tour, Golden Gate Bridge, Dinner at The Cliff House

After waking up a couple of times in the night (an 8-hour time difference is a bitch, however knackered you are when you go to sleep), I headed down to the Library Bar for some breakfast. The Californian-style breakfast proved to be something of a feast: fresh fruit (strawberries, two types of melon, pineapple), yoghurt, tea, coffee, toast, bacon, eggs, sausages, pancakes/French Toast, Californian-style potatoes… I ate like a king every breakfast in California! Also, I discovered by accident that scrambled eggs and maple syrup go together surprisingly well, in moderation. The Californian-style potatoes were also very tasty: roasted up with peppers and spices.

Union Square

I left with my parents and my aunt to catch a City Tour bus (same chain as the open-top red buses in London, as it turned out) later that morning, which took us on a good route around the city from Union Square out to the Golden Gate Bridge and back again later. The route took in some of the famous parts of the city, such as Alamo Square, Haight-Ashbury, Golden Gate Park and Bridge, City Hall, etc. It was a windy day (it’s always windy in San Francisco, from what I can tell), and we were on the top deck of the open-top bus without much in the way of warm clothing, etc., so we decided to get off at the Golden Gate Bridge and take some photos:

View of the full span of the Golden Gate Bridge, taken from the San Francisco side

View of San Francisco from the far side of the Golden Gate Bridge (pictured)

We returned on the tour bus, and met up with Toby and Sera and their friends after a spot of lunch to see how they were doing. We then returned to the hotel to freshen up and change for dinner; we had booked a table at Sutro’s at The Cliff House, which overlooks the Pacific. The building used to be a public baths, the pools seemingly filled by the Pacific itself. The table was booked for dinner through sunset, and both the food and the views were just stunning.

3 May: Exploratorium, Fisherman’s Wharf, The Aquarium of the Bay, Alcatraz

The following day, we headed out to the Exploratorium, a fantastic hands-on science museum/exhibition on the edge of the Presidio. We easily killed three or four hours there playing with the different exhibits, and some of the most interesting ones were to do with the various behaviours of light in different circumstances (e.g., refracted light, polarised light, etc.). One of the cool things the Exploratorium does is to mix art and science, perhaps because of its proximity to the Palace of Fine Arts, which is right next door.

The Palace of Fine Arts, next door to the Exploratorium

At lunchtime, we wandered over to Fisherman’s Wharf, and stopped at the In ‘n’ Out burger there, on the recommendation of Paul Stack. It wasn’t quite what I was expecting, given that it’s a fast-food chain, but it was about as far away from McDonald’s as you can imagine, and the burgers were damn good.

In the afternoon, we had a little wander around Fisherman’s Wharf, where we encountered some sea lions, and paid a visit to the Aquarium of the Bay, featuring a number of the species that can be found in San Francisco Bay. The moon jellyfish and nettle fish were particularly impressive, and I found Nemo!

Sea lions

Moon jellyfish

Nettle fish

A clownfish. It may or may not be called Nemo

A blue tang

Anchovies

After the Aquarium, my parents and aunt returned to the hotel, and later that evening, I embarked on a night tour of Alcatraz. I have to say, I was very impressed with this: the audio commentary, featuring ex-staff and -inmates of the prison, was thorough and always interesting, if initially a little confusing as I struggled to get my bearings at the start. The tour was immensely atmospheric, as the sun set over San Francisco whilst the tour was underway, and twilight fell on the cellblock.

The Alcatraz cell block at night

The “shows” put on by the park rangers (Alcatraz falls under the National Park Service’s responsibility) were very informative. I attended two of the available shows: “The Birdman of Alcatraz”, a talk about the life of Robert Stroud and his time at Alcatraz, and “The Sound of the Slammer”, a demonstration of the cell doors. The doors were hooked up to a really interesting manual mechanism, utilising a gearbox and double clutch. From the one console, the operator could opt to open all the cell doors, all the odd/even ones, open only selected cells, or open all but selected cells. The doors were opened and shut using a lever: pull down and push up to open or shut the doors.

Broadway. (That’s really what it’s called.)

A utility corridor between the rows of cells

By the time the boat docked back at Pier 33 in the Embarcadero, I was pretty knackered, so I caught a streetcar back to Union Square and walked back to the hotel.

4 May: The Wedding!

It was starting to look like I was finally over the jet lag, which was a relief: today was the big day, and the real reason I was in the US at all!

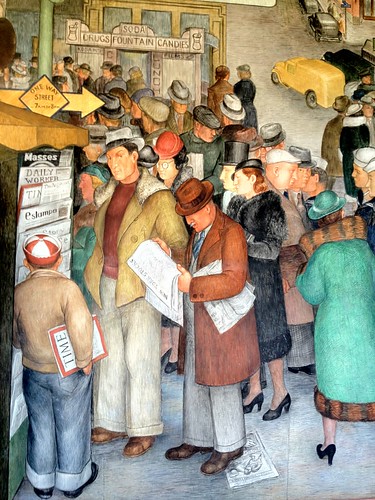

In the morning before the wedding I took a trip on one of the cable cars to Telegraph Hill for a trip up Coit Tower with Mum and Dad. The tower was built with money from Lillie Hitchcock Coit’s estate, which had been bequeathed to the city for “beautification”. Lillie Hitchcock Coit was a wealthy socialite, who enjoyed chasing fires with the city’s fire brigade. Built in the art deco style, the tower stands 210 feet tall on one of the highest points in the city. The ground floor of the tower is covered in murals, completed in a semi-cartoon style and depicting aspects of San Francisco life.

A San Francisco Cable Car

An example of a Telegraph Hill residence, near the Coit Tower

Part of the mural running around the base of the Coit Tower

A view of San Francisco from the top of the Coit Tower

A view of the Golden Gate Bridge and Marin County from the top of the Coit Tower

We walked back down Telegraph Hill via Filbert Street, a stepped walkway down the hill with lots of lovely houses set apart from the city, and found ourselves in Levi’s Plaza, home to the world headquarters of Levi Strauss Jeans.

A cottage on Filbert Street

Levi Strauss & Co. Head Office

Levi’s Plaza was a really nice green space, with a stream and seating and lots of lovely sunshine, and the office buildings were interesting to look at. It must be great working there.

A San Francisco Street Car

We caught a street car back to Fisherman’s Wharf for a spot of lunch at Boudin, a San Francisco Sourdough bread-maker. They have a really interesting museum on-site that delves into the history of the area, as well as the history of the Boudin company and the sourdough bread.

Sourdough bread is really tasty; it has a slight tang to the taste (hence the sourdough name), and is often baked in the French stick (baguette) and bap styles. Boudin’s signature sandwich is a clam chowder bowl, where the bowl is made from sourdough bread.

Running a bit late, we rushed back to the hotel on another street car (as much as one can rush on those things!), and got ready for the wedding. Soon we found ourselves outside the beautiful San Francisco City Hall waiting for the rest of the wedding party to arrive.

San Francisco City Hall front entrance

We headed indoors to wait for them, and were again stunned by how beautiful the building was. A number of other weddings were running at various points around the building.

Another wedding party in the foyer

Part of the foyer, looking towards the North Light Room

To the north and south of the central foyer are two Light Rooms, with frosted glass ceilings. The light in here is much brighter than elsewhere in City Hall, and is very warm as well. The North Light Room is home to the Hall’s café, whilst the South Light Room houses some memorials to the architect, the city, and the nation.

With the rest of the wedding party now arrived, we headed up to the fourth floor for some photographs and the ceremony. The ceremony was over surprisingly quickly: we didn’t have any of the hymns, readings, or other items you get with a church wedding, so there was little more to it than the vows. However short it was, though, it was a very sweet and fitting ceremony for Toby and Sera, and I’m hugely proud of my brother :-)

The ceremony: short, but very sweet

After the ceremony, the photographer took the happy couple off for some photos and the rest of us went in search of a drink and headed outside to flag down the wedding transport. The bus took us on a bit of a tour around the city, through Alamo Square and Haight-Ashbury amongst other places, and finishing up in Golden Gate Park outside the de Young museum and the California Academy of Sciences at the eastern end of the park. The journey was accompanied by a soundtrack of 1960s music, a bottle of Francis Ford Coppola’s Blanc de Blancs sparkling wine, Sofia, and some delicious cake pops (mine was red velvet, nom!).

The wedding transport: a cable car-style bus!

The photographer took Toby and Sera off for some more photos around the park, and Mum and I went to see if we could get into the Jean Paul Gaultier exhibition at the de Young museum; unfortunately it was too late in the day, so I went for a wander around the immediate area before catching a cab back to the hotel with my parents and my aunt.

A fountain in the plaza between the de Young Museum and the California Academy of Sciences

The de Young museum had on an exhibition of Jean Paul Gaultier whilst we were there

The wedding reception was held in one of the function areas at the wonderful Foreign Cinema in the Mission district of San Francisco. The restaurant’s entrance is styled as a classic cinema, and the lobby area is a long corridor decked out with a red carpet. As the sun goes down, diners in the outside area at the back of the restaurant are treated to a projection of a foreign film to accompany their dinner. Seated at a large table on the upper floor, we were next to the projectors used for the films, so we enjoyed the fantastic atmosphere and the films at the same time! The food was really excellent too, so I highly recommend it if you find yourself in San Francisco.

5 May: California Academy of Sciences

On our final day in San Francisco, we returned to the eastern end of Golden Gate Park to visit the California Academy of Sciences (CAS). This was a full day out and more than worth the visit! Our day at CAS started with a visit to the Morrison Planetarium, as a show was beginning about 15-20 minutes after we arrived. This is the largest all-digital planetarium in the world, and runs an interesting program of films; the one we saw was Life: A Cosmic Story (trailer), narrated by Jodie Foster.

CAS is divided into a number of sections, based on geography and scientific subject. They had penguins!

Penguins at CAS

The basement of CAS is given over to an enormous aquarium that I sadly didn’t get to peruse as completely as I would have liked (although, having visited The Aquarium of the Bay just a couple of days earlier, I was not as disappointed as I might otherwise have been). One of the best exhibits in the aquarium was this display of Moon Jellyfish:

And they had a huge tropical fish tank as well, with a large curved wall given over to displaying them:

The tropical fish tank at CAS

One of the main exhibits at CAS is a large (i.e., three-storey) biodome, which mirrors the makeup of rainforests around the world. As you walk up the ramp and through the levels of the biodome, you experience the flora and fauna at each of the levels of a rainforest.

The biodome at CAS

Lizard!

We rounded off the day with a bite to eat at the Top of the Mark, a bar on the top floor of the Mark Hopkins Intercontinental hotel. The hotel is 17 storeys high, and is sited at the top of the hill in Downtown San Francisco. You get some fantastic views over the city from that height, and the food was good too!

The entrance to the Mark Hopkins Intercontinental

The front of the Mark Hopkins Intercontinental

A trio of croque messieurs

Green tea and mango tiramisu

The view out to the Bay

Closing thoughts

I was initially a bit ambivalent towards San Francisco, but it grew on me and I came to appreciate it for the fantastic city it is; I certainly left wanting to go back. The people are so very friendly, and the city is a really wonderful place to be. It has a few run-down areas (the bit of the Mission district I saw was one, and the Tenderloin district is supposed to be the rough bit of town), and a huge problem with homelessness, but it generally feels like quite a safe city, and is a great place to spend some time.

-

Review: Gibraltar

A couple of weeks ago, I was invited by Rachel Hawley to take a look at the latest version of Gibraltar, a real-time logging and error analysis solution. Gibraltar was recently updated to v3.0, and I’d been meaning to look at it in more detail for GiveCRM for a while, so figured this was an opportunity I wanted to take up.

-

Starting out with RavenDB

A while back, I volunteered to re-do the Cambridge Graduate Orchestra’s website, to bring it up to date with the latest web techniques. To provide persistence, I opted for RavenDB, a “second-generation document database”, as I had heard how good it was for rapid development and what a nice API it has. These are my initial thoughts having wired it into my existing solution.

-

Project Kudu: Git deployment for all!

What is Project Kudu? Quite simply, it’s the new Continuous Delivery and Deployment hotness in the .NET world. I’m frankly amazed more people haven’t yet jumped on this, because this has the potential to revolutionise how people do deployments internally and externally. Project Kudu is the framework underlying the Git deployment feature of the new Azure Websites, but you can use it separately from Azure, and, best of all, it’s open-sourced under the Apache 2.0 licence!

-

Azure Websites: To the Cloud!

When Scott Guthrie and team unveiled the new Windows Azure updates back at the beginning of June, one of the most trumpeted features was the new Azure Websites feature, which brings to the Azure platform a service provided by AppHarbor and Heroku (for the Rubyists): simple hosting of websites on a cloud platform. One of the killer features of these platforms, and now Azure Websites, is simple deployment to the cloud via git push. AppHarbor in particular does a good job of this in the .NET space, building your project and running the tests before deciding whether or not to deploy that commit. They have some serious competition from Azure now, though.
